An Improved Neural Network Model Architecture

Noah M. Kenney

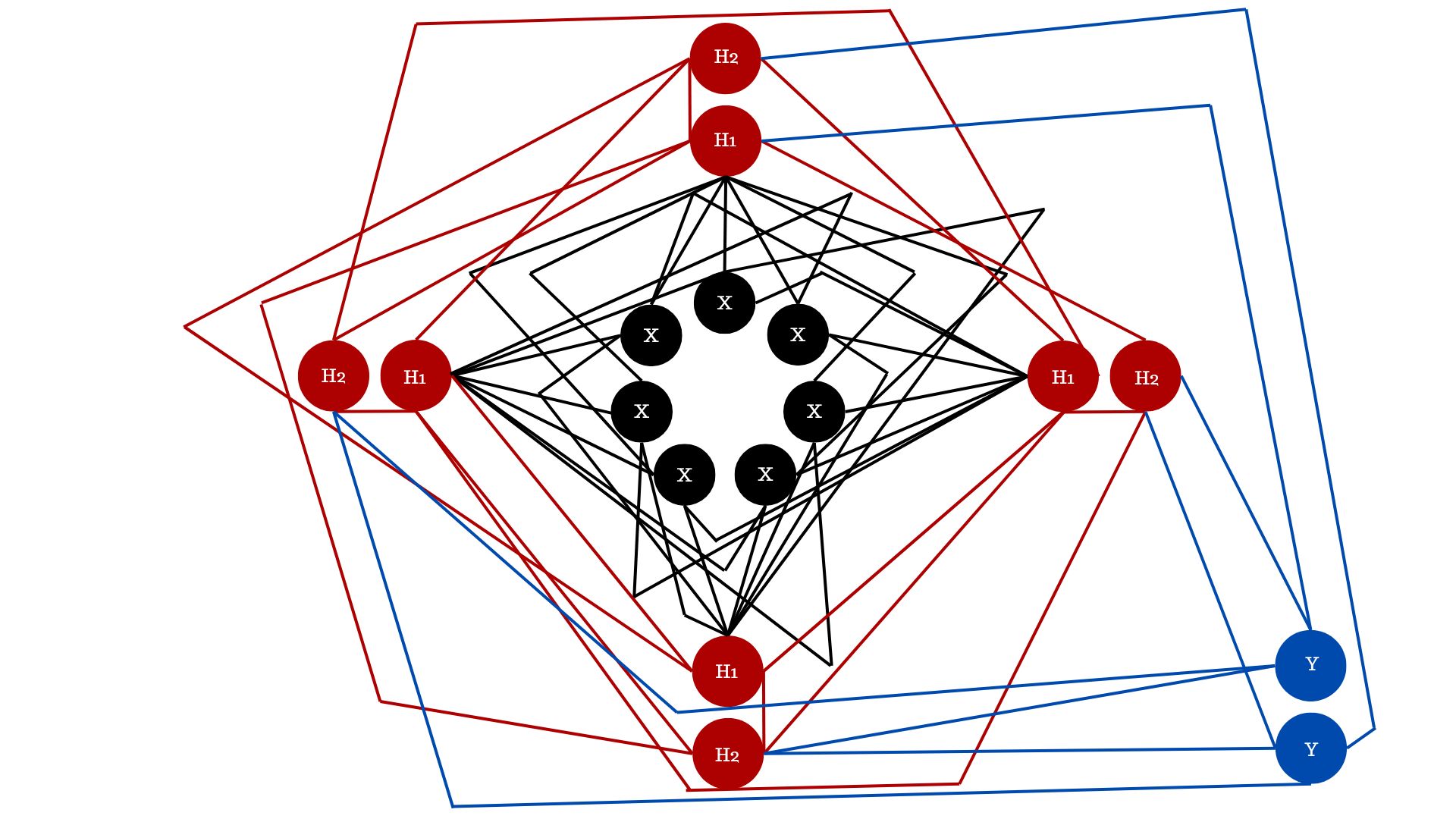

In the artificial intelligence field, it is important to consider the role of neural networks, which allow computers to process information in a way that attempts to mimic the human brain. Traditionally, neural networks are depicted in a linear fashion, with neuron density decreasing as data progresses through the layers (input layer > hidden layers > output layer). This structure has a significant flaw: its linear progression fails to account for what we could refer to as "pass-through" nodes (or neurons). A pass-through node is one that receives data without that data being included in the output layer.
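The definition above leaves open exactly how a pass-through node is wired. As a minimal sketch, assuming one possible reading (a node that receives its input value but whose outgoing weights are never used when computing the next layer), a single dense layer in plain Python could skip those nodes and count the multiply-accumulates it actually performs. The function name `forward_with_pass_through` and the boolean `pass_through` mask are hypothetical illustrations, not part of the architecture described here.

```python
from typing import List, Sequence, Tuple


def forward_with_pass_through(
    x: Sequence[float],
    weights: Sequence[Sequence[float]],
    pass_through: Sequence[bool],
) -> Tuple[List[float], int]:
    """Compute one dense layer (no activation), skipping pass-through inputs.

    Hypothetical reading of a pass-through node: it receives a value, but that
    value is excluded from the next layer, so its row of weights is never read.
    `weights[i][j]` connects input i to output j.
    Returns the layer output and the number of multiply-accumulates performed.
    """
    if not (len(x) == len(weights) == len(pass_through)):
        raise ValueError("x, weights and pass_through must have the same length")
    n_out = len(weights[0]) if weights else 0
    out = [0.0] * n_out
    macs = 0
    for i, xi in enumerate(x):
        if pass_through[i]:
            continue  # data received, but not carried into the output
        for j in range(n_out):
            out[j] += xi * weights[i][j]
            macs += 1
    return out, macs


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    y, macs = forward_with_pass_through(x, weights, [False, True, False])
    print(y, macs)  # [4.0, 3.0] 4
```

With the second input flagged as pass-through, the layer returns `[4.0, 3.0]` using 4 multiply-accumulates instead of the 6 that a fully dense pass over the same weights would need.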

Today, this flaw has negligible practical consequences, given the typical uses of neural networks, the relative availability of computational power, and the optimization of the input layer (or dataset).

However, as the number of neurons in the input layer continues to grow, utilizing pass-through nodes (or neurons) could drastically improve the efficiency with which the input layer (x) is mapped to the output layer (y) through the network's mathematical operations.
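A rough operation count makes this scaling argument concrete. Assuming, hypothetically, a fully connected layer in which a fixed share of the input neurons are pass-through and therefore skipped, the multiply-accumulate count falls from n_in × n_out to (n_in - k) × n_out, where k is the number of pass-through inputs. The helper `dense_macs` and the 25% pass-through share are illustrative assumptions only, not measured values.

```python
def dense_macs(n_in: int, n_out: int, n_pass_through: int = 0) -> int:
    """Multiply-accumulates for one dense layer when n_pass_through inputs are skipped.

    Assumes (hypothetically) that each pass-through input saves all n_out of
    its multiply-accumulates and that no other cost is introduced.
    """
    if not 0 <= n_pass_through <= n_in:
        raise ValueError("n_pass_through must be between 0 and n_in")
    return (n_in - n_pass_through) * n_out


if __name__ == "__main__":
    n_out = 100
    share = 0.25  # assumed fraction of pass-through inputs (illustrative only)
    for n_in in (1_000, 100_000, 10_000_000):
        k = int(n_in * share)
        full = dense_macs(n_in, n_out)
        reduced = dense_macs(n_in, n_out, k)
        print(f"n_in={n_in:>10,}  full={full:>13,}  "
              f"with pass-through={reduced:>13,}  saved={full - reduced:,}")
```

Under this assumption the relative saving stays equal to the pass-through share, while the absolute number of avoided operations grows linearly with the size of the input layer, which is the regime this paragraph is concerned with.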

For this reason, some of my recent work has focused on developing a neural network structure that is loosely based on the design of atoms and molecules and allows for a circular flow of data. This structure has two benefits: it accommodates pass-through nodes (significantly increasing efficiency), and it allows us to predict the binary state (0 or 1) of a particular node in advance more accurately.